| Chatbots sometimes make things up. Is AI's hallucination problem fixable? | 02-08-2023 02:45 |

HarveyH55 ★★★★★ ★★★★★

(5197) |

Chatbots sometimes make things up. Is AI's hallucination problem fixable?

By MATT O'BRIEN

AP Technology Writer

Spend enough time with ChatGPT and other artificial intelligence chatbots and it doesn't take long for them to spout falsehoods.

Described as hallucination, confabulation or just plain making things up, it's now a problem for every business, organization and high school student trying to get a generative AI system to compose documents and get work done. Some are using it on tasks with the potential for high-stakes consequences, from psychotherapy to researching and writing legal briefs.

"I don't think that there's any model today that doesn't suffer from some hallucination," said Daniela Amodei, co-founder and president of Anthropic, maker of the chatbot Claude 2.

"They're really just sort of designed to predict the next word," Amodei said. "And so there will be some rate at which the model does that inaccurately."

Anthropic, ChatGPT-maker OpenAI and other major developers of AI systems known as large language models say they're working to make them more truthful.

How long that will take — and whether they will ever be good enough to, say, safely dole out medical advice — remains to be seen.

"This isn't fixable," said Emily Bender, a linguistics professor and director of the University of Washington's Computational Linguistics Laboratory. "It's inherent in the mismatch between the technology and the proposed use cases."

A lot is riding on the reliability of generative AI technology. The McKinsey Global Institute projects it will add the equivalent of $2.6 trillion to $4.4 trillion to the global economy. Chatbots are only one part of that frenzy, which also includes technology that can generate new images, video, music and computer code. Nearly all of the tools include some language component.

Google is already pitching a news-writing AI product to news organizations, for which accuracy is paramount. The Associated Press is also exploring use of the technology as part of a partnership with OpenAI, which is paying to use part of AP's text archive to improve its AI systems.

In partnership with India's hotel management institutes, computer scientist Ganesh Bagler has been working for years to get AI systems, including a ChatGPT precursor, to invent recipes for South Asian cuisines, such as novel versions of rice-based biryani. A single "hallucinated" ingredient could be the difference between a tasty and inedible meal.

When Sam Altman, the CEO of OpenAI, visited India in June, the professor at the Indraprastha Institute of Information Technology Delhi had some pointed questions.

"I guess hallucinations in ChatGPT are still acceptable, but when a recipe comes out hallucinating, it becomes a serious problem," Bagler said, standing up in a crowded campus auditorium to address Altman on the New Delhi stop of the U.S. tech executive's world tour.

"What's your take on it?" Bagler eventually asked.

Altman expressed optimism, if not an outright commitment.

"I think we will get the hallucination problem to a much, much better place," Altman said. "I think it will take us a year and a half, two years. Something like that. But at that point we won't still talk about these. There's a balance between creativity and perfect accuracy, and the model will need to learn when you want one or the other."

But for some experts who have studied the technology, such as University of Washington linguist Bender, those improvements won't be enough.

Bender describes a language model as a system for "modeling the likelihood of different strings of word forms," given some written data it's been trained upon.
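To make Bender's phrase concrete, here is a minimal sketch of the simplest kind of language model: a bigram model that counts which word follows which in a tiny training corpus. It scores a string by combining those next-word probabilities (summing their logs). The corpus, the function names `train_bigrams` and `log_likelihood`, and the add-one smoothing are illustrative assumptions. Chatbots like ChatGPT use neural networks trained on vastly more text, not word-pair counts.

```python
import math
from collections import Counter, defaultdict

def train_bigrams(corpus):
    """Count how often each word follows each other word (toy bigram model)."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        # <s> and </s> mark the start and end of a sentence.
        words = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def log_likelihood(counts, sentence, vocab_size, alpha=1.0):
    """Sum of log P(next | prev), with add-alpha smoothing so unseen pairs aren't zero."""
    words = ["<s>"] + sentence.lower().split() + ["</s>"]
    total = 0.0
    for prev, nxt in zip(words, words[1:]):
        row = counts.get(prev, Counter())
        p = (row[nxt] + alpha) / (sum(row.values()) + alpha * vocab_size)
        total += math.log(p)
    return total

corpus = ["the cat sat on the mat", "the dog sat on the rug"]
model = train_bigrams(corpus)
# Vocabulary: every word seen either before or after another word.
vocab = set(model) | {w for row in model.values() for w in row}

seen = log_likelihood(model, "the cat sat on the mat", len(vocab))
unseen = log_likelihood(model, "the dog sat on the mat", len(vocab))
scrambled = log_likelihood(model, "mat the on sat dog the", len(vocab))
print(seen, unseen, scrambled)
```

In this toy run, "the dog sat on the mat", which never appears in the corpus, gets the same score as the sentence that does, while the scrambled word order scores far lower. The score measures how typical a string of word forms looks, not whether it describes anything that happened.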

It's how spell checkers are able to detect when you've typed the wrong word. It also helps power automatic translation and transcription services, "smoothing the output to look more like typical text in the target language," Bender said. Many people rely on a version of this technology whenever they use the "autocomplete" feature when composing text messages or emails.

The latest crop of chatbots such as ChatGPT, Claude 2 or Google's Bard try to take that to the next level, by generating entire new passages of text, but Bender said they're still just repeatedly selecting the most plausible next word in a string.
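"Repeatedly selecting the most plausible next word" is usually called greedy decoding. This sketch uses the same kind of toy word-pair counts. The four-sentence corpus and the function names are hypothetical, and real chatbots often sample rather than decode greedily. At each step it appends the word that most often followed the previous one. Every step is locally plausible, yet the finished sentence is a false claim that appears nowhere in the training text.

```python
from collections import Counter, defaultdict

def train_bigrams(corpus):
    """Count how often each word follows each other word (toy bigram model)."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def greedy_generate(counts, max_words=20):
    """Repeatedly append the most frequent next word until the end marker."""
    words, prev = [], "<s>"
    for _ in range(max_words):
        row = counts.get(prev)
        if not row:
            break
        # Highest count wins; ties go to the alphabetically first word.
        nxt = min(row, key=lambda w: (-row[w], w))
        if nxt == "</s>":
            break
        words.append(nxt)
        prev = nxt
    return " ".join(words)

corpus = [
    "paris is the capital of france",
    "rome is the capital of italy",
    "berlin is in germany",
    "berlin is the largest city in germany",
]
print(greedy_generate(train_bigrams(corpus)))  # prints: berlin is the capital of france
```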

When used to generate text, language models "are designed to make things up. That's all they do," Bender said. They are good at mimicking forms of writing, such as legal contracts, television scripts or sonnets.

"But since they only ever make things up, when the text they have extruded happens to be interpretable as something we deem correct, that is by chance," Bender said. "Even if they can be tuned to be right more of the time, they will still have failure modes — and likely the failures will be in the cases where it's harder for a person reading the text to notice, because they are more obscure."

Those errors are not a huge problem for the marketing firms that have been turning to Jasper AI for help writing pitches, said the company's president, Shane Orlick.

"Hallucinations are actually an added bonus," Orlick said. "We have customers all the time that tell us how it came up with ideas — how Jasper created takes on stories or angles that they would have never thought of themselves."

The Texas-based startup works with partners like OpenAI, Anthropic, Google or Facebook parent Meta to offer its customers a smorgasbord of AI language models tailored to their needs. For someone concerned about accuracy, it might offer up Anthropic's model, while someone concerned with the security of their proprietary source data might get a different model, Orlick said.

Orlick said he knows hallucinations won't be easily fixed. He's counting on companies like Google, which he says must have a "really high standard of factual content" for its search engine, to put a lot of energy and resources into solutions.

"I think they have to fix this problem," Orlick said. "They've got to address this. So I don't know if it's ever going to be perfect, but it'll probably just continue to get better and better over time."

Techno-optimists, including Microsoft co-founder Bill Gates, have been forecasting a rosy outlook.

"I'm optimistic that, over time, AI models can be taught to distinguish fact from fiction," Gates said in a July blog post detailing his thoughts on AI's societal risks.

He cited a 2022 paper from OpenAI as an example of "promising work on this front." More recently, researchers at the Swiss Federal Institute of Technology in Zurich said they developed a method to detect some, but not all, of ChatGPT's hallucinated content and remove it automatically.

But even Altman, as he markets the products for a variety of uses, doesn't count on the models to be truthful when he's looking for information.

"I probably trust the answers that come out of ChatGPT the least of anybody on Earth," Altman told the crowd at Bagler's university, to laughter.

Pretty good article about why AI will never be 100% accurate and why chatbots will always give some weird responses. It's never really been the AI, but unrealistic expectations. Generative AI is designed to make shit up. It's not creativity; it's trying to match its training to a request. It's fine, and fairly accurate, if the request falls entirely within the AI's training and that training is factual and accurate to begin with, which can never be the case with chatbots. The training treats human-written text as if humans never make mistakes. Humans make mistakes all the time, but we usually learn something and have to figure out a way to fix those mistakes. Sometimes those mistakes get turned into something useful.

I think the AI companies are mostly going to make the mistakes less obvious. A task-specific AI is pretty good, since its training is confined to only what's needed to complete the task. Generative AI is general-purpose: a product anyone can use for almost any task. |

| 02-08-2023 18:51 |

Swan ★★★★★ ★★★★★

(5723) |

HarveyH55 wrote:

Pretty good article about why AI will never be 100% accurate and why chatbots will always give some weird responses. It's never really been the AI, but unrealistic expectations. Generative AI is designed to make shit up. It's not creativity; it's trying to match its training to a request. It's fine, and fairly accurate, if the request falls entirely within the AI's training and that training is factual and accurate to begin with, which can never be the case with chatbots. The training treats human-written text as if humans never make mistakes. Humans make mistakes all the time, but we usually learn something and have to figure out a way to fix those mistakes. Sometimes those mistakes get turned into something useful.

I think the AI companies are mostly going to make the mistakes less obvious. A task-specific AI is pretty good, since its training is confined to only what's needed to complete the task. Generative AI is general-purpose: a product anyone can use for almost any task.

AI does not have the power or ability to make things up; all it can do is forward wrongly or oddly sourced information.

IBdaMann claims that Gold is a molecule, and that the last ice age never happened because I was not there to see it. The only conclusion that can be drawn from this is that IBdaMann is clearly not using enough LSD.

According to CDC/Government info, people who were vaccinated are now DYING at a higher rate than non-vaccinated people, which exposes the covid vaccines as the poison that they are, this is now fully confirmed by the terrorist CDC

This place is quieter than the FBI commenting on the Chinese bank account information on Hunter Xiden's laptop

I LOVE TRUMP BECAUSE HE PISSES OFF ALL THE PEOPLE THAT I CAN'T STAND.

ULTRA MAGA

"Being unwanted, unloved, uncared for, forgotten by everybody, I think that is a much greater hunger, a much greater poverty than the person who has nothing to eat." MOTHER TERESA OF CALCUTTA

So why is helping to hide the murder of an American president patriotic?

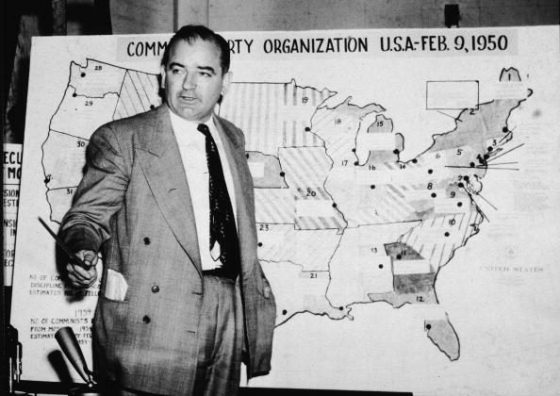

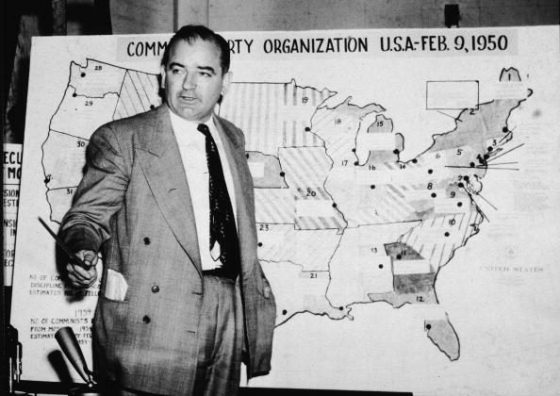

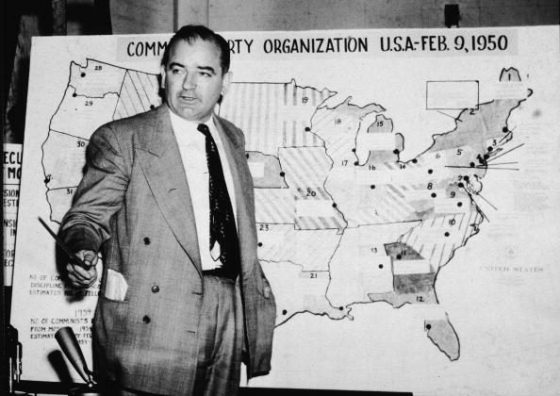

It's time to dig up Joseph McCarthy and show him TikTok, then duck.

Now be honest, was I correct or was I correct? LOL

Edited on 02-08-2023 18:52 |

| 02-08-2023 20:35 |

IBdaMann ★★★★★ ★★★★★

(14420) |

Swan wrote: AI does not have the power or ability to make things up,

Software can certainly make things up; that's what pseudorandom number generators are for. Stochastic weather generators fabricate parameters for simulated weather. Video games create conditions and details for scenarios. Simulators make up challenges for the trainee. Chatbots can certainly generate "personal preference" details, be selective about sources ... and can choose (via pseudorandom number generation) to draw from totally erroneous sources. If the AI is creating an image, it has to "make up" thousands of parameters. |
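The pseudorandom-number point can be illustrated with temperature sampling, a common way text generators choose among candidate next words instead of always taking the top one. In this minimal sketch the candidate words and their scores are made up for illustration, and `sample_next` is a hypothetical helper, not any vendor's API. Each score is divided by a temperature and turned into a weight with a softmax, and a seeded pseudorandom generator picks one word. A very low temperature almost always yields the top-scoring word. A higher one lets less likely words, including wrong ones, through more often.

```python
import math
import random
from collections import Counter

def sample_next(scores, temperature, rng):
    """Pick one word from {word: score} with probability softmax(score / temperature)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    words = sorted(scores)  # fixed order so a seeded rng gives repeatable results
    scaled = [scores[w] / temperature for w in words]
    top = max(scaled)
    # Subtracting the max before exp() avoids overflow without changing the ratios.
    weights = [math.exp(s - top) for s in scaled]
    return rng.choices(words, weights=weights, k=1)[0]

# Illustrative scores for the word after "The capital of France is".
scores = {"paris": 2.0, "lyon": 1.0, "atlantis": 0.0}

for temperature in (0.1, 1.0, 2.0):
    rng = random.Random(0)  # seeded pseudorandom generator: same seed, same picks
    picks = Counter(sample_next(scores, temperature, rng) for _ in range(1000))
    print(temperature, dict(picks))
```

Choosing the temperature is a design trade-off. Values near zero behave much like always picking the most likely word. Larger values trade reliability for variety.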

| 02-08-2023 21:03 |

Swan ★★★★★ ★★★★★

(5723) |

IBdaMann wrote:

Swan wrote: AI does not have the power or ability to make things up,

Software can certainly make things up; that's what pseudorandom number generators are for. Stochastic weather generators fabricate parameters for simulated weather. Video games create conditions and details for scenarios. Simulators make up challenges for the trainee. Chatbots can certainly generate "personal preference" details, be selective about sources ... and can choose (via pseudorandom number generation) to draw from totally erroneous sources. If the AI is creating an image, it has to "make up" thousands of parameters.

Gibberish generators are just software running as designed and have nothing to do with AI.

You seem even more confused than normal

You may continue fingerpainting now. |

| 03-08-2023 08:47 |

IBdaMann ★★★★★ ★★★★★

(14420) |

Swan wrote: You seem even more confused than normal

I want to be your jelly bean ... your chocolate-covered, bacon-wrapped, deep-fried, venison peanut butter jelly bean. I can be an eggplant jelly bean as well.

Swan wrote: You may continue fingerpainting now

I can be any color you want. Hey, were you expecting to just come over here, get down on your knees and slide me into your mouth? |

| 03-08-2023 13:20 |

Swan ★★★★★ ★★★★★

(5723) |

IBdaMann wrote:

Swan wrote: You seem even more confused than normal

I want to be your jelly bean ... your chocolate-covered, bacon-wrapped, deep-fried, venison peanut butter jelly bean. I can be an eggplant jelly bean as well.

Swan wrote: You may continue fingerpainting now

I can be any color you want. Hey, were you expecting to just come over here, get down on your knees and slide me into your mouth?

Claiming that you can be any color of jelly bean is entirely possible given the dynamics of multiple personality disorder, a rare dissociative disorder.

So say hi to Sybil for me. |