Tornados 2 Weeks Before Christmas?

| 18-12-2021 06:31 | |

| IBdaMann (14395) |

Into the Night wrote:Ships are safer at sea when a big storm comes. That's why they set sail to get away from it. This is one of those sayings that isn't going away despite technological advancement. No ships sail anymore. Boats, yes, but ships no. Nonetheless, it's what we say they do. No one dials a phone number anymore. Nonetheless, it's what we say we do. Nobody tapes conversations anymore or gets audio or video on tape. Nonetheless, it's what we say we do. Nobody writes a report; nobody writes, period. Nonetheless, it's what we say we do. Soon, when all computer memory is SSD, we'll still be referring to data on "the disk." Words are great. . I don't think i can [define it]. I just kind of get a feel for the phrase. - keepit A Spaghetti strainer with the faucet running, retains water- tmiddles Clouds don't trap heat. Clouds block cold. - Spongy Iris Printing dollars to pay debt doesn't increase the number of dollars. - keepit If Venus were a black body it would have a much much lower temperature than what we found there.- tmiddles Ah the "Valid Data" myth of ITN/IBD. - tmiddles Ceist - I couldn't agree with you more. But when money and religion are involved, and there are people who value them above all else, then the lies begin. - trafn You are completely misunderstanding their use of the word "accumulation"! - Climate Scientist. The Stefan-Boltzman equation doesn't come up with the correct temperature if greenhouse gases are not considered - Hank :*sigh* Not the "raw data" crap. - Leafsdude IB STILL hasn't explained what Planck's Law means. Just more hand waving that it applies to everything and more asserting that the greenhouse effect 'violates' it.- Ceist |

| 18-12-2021 06:41 | |

| James___★★★★★ (5513) |

IBdaMann wrote:Into the Night wrote:Ships are safer at sea when a big storm comes. That's why they set sail to get away from it. So my p.c. doesn't have a hard drive, really? Maybe I like SSD because it's easier to work with programs like SketchUp? Ever try to do 3D rendering without SSD? Next you'll be saying a graphics card without a couple GB of memory doesn't matter. I've heard about people like you. p.s., I bought my p.c. because it's SSD with a graphics card with a couple MB (I might actually have 2 Gigabyte) of memory. It really does make a difference with using some programs which includes rendering as with programs like Blender. HDs require a disk rotating and being read. SSDs don't have this problem. Rotating a disk creates lag. Edited on 18-12-2021 06:57 |

| 18-12-2021 07:53 | |

| IBdaMann (14395) |

James___ wrote:p.s., I bought my p.c. because it's SSD with a graphics card with a couple MB If you were able to purchase your computer with SSD then any graphics card that came with it would have, at a minimum, 2GB of memory. James___ wrote: It really does make a difference with using some programs which includes rendering as with programs like Blender. Today, yes. In the not too distant future, graphics cards will all but disappear entirely. Those functions will be built into microprocessors and thus will be much faster and more powerful, mostly because calls won't have to leave the processor and the processor has full access to all system memory, i.e. it is not limited to a mere 2GB or other such fixed quantity of memory. This development is already happening. Intel's 11th generation i7, for example, already processes 4K natively and has 96 EUs (execution units) that work in parallel at really high clock speeds to perform shading, textures and all the visual effects that developers use in games and graphics-intensive applications. Over time, microprocessors will catch up to graphics cards in capabilities, and that will spell the end for graphics cards because microprocessors will deliver all the capability to laptops and cell phones without all the added bulk and power drain of a full-sized PC. |

| 18-12-2021 08:06 | |

| duncan61★★★★★ (2021) |

Love Gordon Lightfoot. The Wreck of the Edmund Fitzgerald. I am going to go out the back and play it on my iPad right now. A freak storm in Portsmouth harbour in the 1700s did more damage to the British navy than any other nation ever had. If that happened now, guess what would be blamed? |

| 18-12-2021 08:22 | |

| Into the Night (21592) |

IBdaMann wrote:Into the Night wrote:Ships are safer at sea when a big storm comes. That's why they set sail to get away from it. There is actually some discussion about using sails on large ships (cargo container ships) to prevent global warming. IBdaMann wrote: SSDs are still referred to as 'disks'. Even a USB stick is sometimes referred to as a 'disk'. NVM sticks are also referred to as 'disks'. Such is English. We drive on parkways and park on driveways. The Parrot Killer Debunked in my sig. - tmiddles Google keeps track of paranoid talk and i'm not on their list. I've been evaluated and certified. - keepit nuclear powered ships do not require nuclear fuel. - Swan While it is true that fossils do not burn it is also true that fossil fuels burn very well - Swan Edited on 18-12-2021 08:24 |

| 18-12-2021 08:26 | |

| Into the Night (21592) |

James___ wrote:IBdaMann wrote:Into the Night wrote:Ships are safer at sea when a big storm comes. That's why they set sail to get away from it. NOT rotating a disk creates even MORE lag! |

| 18-12-2021 08:42 | |

| Into the Night (21592) |

IBdaMann wrote:James___ wrote:p.s., I bought my p.c. because it's SSD with a graphics card with a couple MB Indeed. AMD is producing similar stuff. The graphics output connector will simply be a bracket that connects to pins coming out of the processor directly. However, there are cases where developers want a graphics card that can serve multiple screens in Xinerama format (like one big desktop across multiple monitors). New graphics cards can deliver 6 or even 8 such channels already. Of course, most users don't want a wall of monitors in front of them. But developers often do, and it's a cinch to construct those multimonitor display signs you see in hotels, casinos, cruise ships, or even McDonald's. |

| 18-12-2021 08:50 | |

| Into the Night (21592) |

duncan61 wrote: The same kind of thing happened when the Mongols came marauding into Japan in the 13th century. A storm came up and sank the Mongol fleet heading to Japan. The Japanese practice the beliefs of Shinto. The spirits of Shinto are called 'kami', and the Japanese word for wind is 'kaze'. Thus the word 'kamikaze', meaning 'divine wind'. The wind they thanked for sinking the Mongol fleet. Much later, during WW2, the kamikaze were of course the suicide pilots sent to deliver Japan again from her enemies as the 'divine wind'. One of the last acts of a desperate nation that was already losing the war. Today, Japan has found the answer to world peace. Profit. It has lost its warmongering ways, and now spends its military budget on defending Japan from Chinese or Russian aggression. Edited on 18-12-2021 08:54 |

| 18-12-2021 08:51 | |

| IBdaMann (14395) |

duncan61 wrote: Great song, and an educational documentary as well. Before you listen to the song, check out these links: The legend lives on from the Chippewa on down Of the big lake they called 'gitche gumee' With a load of iron ore twenty-six thousand tons more Than the Edmund Fitzgerald weighed empty The ship was the pride of the American side Coming back from some mill in Wisconsin As the big freighters go, it was bigger than most With a crew and good captain well seasoned The searches all say they'd have made Whitefish Bay If they'd put fifteen more miles behind her In a musty old hall in Detroit they prayed, In the maritime sailors' cathedral |

| 18-12-2021 09:00 | |

| Into the Night (21592) |

IBdaMann wrote:duncan61 wrote: Nicely done. |

| 18-12-2021 09:40 | |

| IBdaMann (14395) |

Into the Night wrote:However, there are cases where developers want a graphics card that can serve multiple screens in Xinerama format (like one big desktop across multiple monitors). New graphics cards can deliver 6 or even 8 such channels already. I hear you. Totally true ... today. Future motherboards will come with multiple video ports fed by the main processor, something similar to this:  Edit: I just checked, the i7 has the bandwidth (capacity) to power four 4K monitors. It just doesn't have the ports. At my work, I use a docking station to use my i7 laptop to power three 4K monitors at the same time. . Edited on 18-12-2021 10:04 |

| 18-12-2021 10:19 | |

| HarveyH55 (5197) |

IBdaMann wrote:James___ wrote:p.s., I bought my p.c. because it's SSD with a graphics card with a couple MB Doubtful. High resolution graphics will always be a separate specialty market. Most people won't need it, or the higher cost. Graphics cards have their own processor(s)/memory that handle a lot of CPU intensive work, leaving the main computer free for other tasks. The more crap you pack into a CPU, the greater the power usage. For battery operated devices, you'll need larger batteries, or settle for short run time. Packing higher resolution on a small screen doesn't really make a huge difference. It's still only going to display at the resolution of the pixel count available on the display. Most portable devices are basically used as terminals. Request and display, what's already been rendered on a more powerful computer. |

| 18-12-2021 11:34 | |

| IBdaMann (14395) |

HarveyH55 wrote:Doubtful. It's a certainty, unless technology ceases to develop. HarveyH55 wrote: High resolution graphics will always be a separate specialty market. Nope. High-resolution graphics will always be in huge demand, there just isn't any reason they need to remain external to the microprocessor. The bulk and the power drain are negatives ... and unnecessary. They will go away. It's already underway. HarveyH55 wrote:Most people won't need it, This isn't really the issue. Normal technological development will simply push the functions performed by today's graphics cards onto the processor, at which point graphics development will simply be included in the microprocessor engineering instead of engineering of some unnecessary additional piece of hardware. Motherboards will eventually drop the graphics card slot. I'm not saying that graphics are somehow going away. They are simply moving to different hardware ... to become more and more efficient, draw less and less power, and to become ever faster. Imagine graphics cards becoming smaller and smaller over time ... and just naturally coexisting with the main processor ... and ultimately being integrated with the chip. It's going to happen and is already underway. HarveyH55 wrote: Graphics cards have their own processor(s)/memory that handle a lot of CPU intensive work, ... and each new generation of microprocessor is more powerful than the previous. As I have already mentioned, the big processor companies are already building new, separate graphics hardware into those new, more powerful chips, and it's just a matter of time before that new graphics hardware being added is more than powerful enough to handle many high-resolution monitors that are playing graphics-intensive games/applications.
Also, each generation of motherboard is capable of packing on greater amounts of system memory, and it is both so much more efficient and powerful to just let a processor directly utilize the 20GB of system memory it needs for whatever graphics-intensive application it might be processing than to falter with a separate graphics card whose much smaller fixed amount of "dedicated" memory isn't sufficient in the first place and is further hindered by being a separate device that requires overhead. HarveyH55 wrote: leaving the main computer free for other tasks. The graphics card will be the limiting factor. The optimal configuration is a main processor that has direct access to all resources as needed whenever needed, and doesn't have to outsource anything outside the processor to process it. HarveyH55 wrote: The more crap you pack into a CPU, the greater the power usage. Yes, but the overall power reduction is huge when eliminating the vast power drain of a graphics card and instead converting that to a very minor increase consumed by the additions to the main processor. It's night and day. Of course, a chip with graphics capability fits into the thinnest, lightest laptops. Only the biggest, bulkiest laptops with little hope for battery life will support, at most, a small graphics card. HarveyH55 wrote:For battery operated devices, you'll need larger batteries, or settle for short run time. That's not turning out to be the case. Like I said, my laptop can process 4K video and it is very thin and has great battery life. Things will only get better, not worse. Remember that once upon a time people said the exact same thing about sound cards, even as 16-bit sound was becoming standard in all microprocessors. HarveyH55 wrote: Packing higher resolution on a small screen doesn't really make a huge difference. People such as myself want the graphics power for rendering graphic images quickly and I'm glad my chip can do it. 
HarveyH55 wrote:It's still only going to display at the resolution of the pixel count available on the display. I get it. Focus on the processing power. Regardless of the display resolution, the computer must render all the images that it must display. That's what you want to work well. HarveyH55 wrote:Most portable devices are basically used as terminals. The idea is for all portable devices to be fully graphics capable. It appears that's the inevitable technological forecast. Powerful graphics functionality will become standard on chips and will become standard in portable devices. It's already underway. I do think graphics cards will be around for a while. They will be going away, however. |

| 18-12-2021 15:16 | |

| duncan61★★★★★ (2021) |

And the bells ring 29 times for the men of the Edmund Fitzgerald |

| 18-12-2021 18:34 | |

| IBdaMann (14395) |

duncan61 wrote: ... who were never recovered because Gitche Gumee never gives up her dead. The whole story is eerie. Nothing happened to the Arthur M. Anderson that was trailing the Edmund Fitzgerald and that noticed the Edmund Fitzgerald's lights just weren't there anymore. |

| 18-12-2021 19:54 | |

| Into the Night (21592) |

IBdaMann wrote:Into the Night wrote:However, there are cases where developers want a graphics card that can serve multiple screens in Xinerama format (like one big desktop across multiple monitors). New graphics cards can deliver 6 or even 8 such channels already. Probably not for quite a while yet. Indeed, we don't even know what video feeds are going to be in use by that time. |

| 18-12-2021 19:58 | |

| Into the Night (21592) |

HarveyH55 wrote:IBdaMann wrote:James___ wrote:p.s., I bought my p.c. because it's SSD with a graphics card with a couple MB The problem with laptop batteries is that they are still powering a micro-coded processor. The power hungry Intel line is the worst of that. AMD is slightly better, but all micro-coded processors are power hungry. The real solution for longer battery life will be to move to base coded processors, such as the ARM design. This is the processor typically used in cell phones now. Windows has trouble running on ARM. Linux has no trouble with it though. The GPU is a bit of another story, but improvements are being made on them as well. ARM designs have no GPU built into them. It simply becomes another chip on the motherboard. Edited on 18-12-2021 19:59 |

| 18-12-2021 20:00 | |

| Into the Night (21592) |

HarveyH55 wrote:IBdaMann wrote:James___ wrote:p.s., I bought my p.c. because it's SSD with a graphics card with a couple MB I already said this. |

| 18-12-2021 20:04 | |

| Into the Night (21592) |

IBdaMann wrote:HarveyH55 wrote:Doubtful. There is no 'graphics card slot' in a PC. There never was. The PCIe interface isn't going away either. It can be used for graphics, additional network cards, and even a direct interface to mass storage (one form of NVM storage). It's faster than SATA. In other words, you can plug ANYTHING into a PCIe slot. |

| 18-12-2021 20:14 | |

| Into the Night (21592) |

IBdaMann wrote:duncan61 wrote: It's possible the Edmund Fitzgerald went down due to cargo liquefaction. This can happen to ore ships if there is too much moisture in the ore being loaded. It will sink a ship quickly. For this reason ship captains are normally very concerned about the ore being loaded. Sometimes, they are lied to, or they make a bad decision to load the ore anyway. It doesn't take much extra moisture to cause a major risk of liquefaction. Once underway, wave action can start an internal wave action within the ore of the ship. The loaded ore actually starts behaving like a semi-liquid. Eventually the ore winds up on one side of the hold, and the ship will capsize and go down. It's pretty quick. About all the crew has time to do is get out a Mayday call. We don't know what actually happened to cause the Edmund Fitzgerald to go down in that storm. Liquefaction can blow out the hatchways, which is why that detail was probably included in the Mayday call. This may have been the cause of the disaster, but no one really knows for sure. Any way you look at it, Gitche Gumee claimed her. A sad and true story. Edited on 18-12-2021 20:18 |

| 18-12-2021 21:12 | |

| HarveyH55 (5197) |

IBdaMann wrote:HarveyH55 wrote:Doubtful. Single board computers have been around for a while. Not many even have expansion ports to add cards anymore. Mostly, because they want you to buy a whole new computer, the more expensive models, rather than upgrading/repairing yourself. There have been dedicated chips for a long time that handle graphics functions. There are several other chips on the motherboard that assist the CPU with other functions. These co-processors do a lot more than computations. It's not always having to offload tasks, it's better to have the functions and features handled separately. Timing is everything for some applications. A super-fast processor isn't always useful, if it's needed for many tasks. Your storage devices, display, speakers, camera, keyboard, all work best at different clock speeds. Most are very slow, compared to the CPU. The CPU handles math really well, peripherals are a little different story. There are limits to how much, and how tightly you pack components on a piece of silicon. The more you have going on at the same time, the more thermal management becomes critical. You still need to keep it cool, or it destroys itself. There are always people wanting to push the limits, develop something faster, more powerful, smaller. Not always practical, often fail. But, it's a challenge, and new products still get made. Not all make it to market, or do well. I work with microcontrollers, which are similar to what you are getting at. Mostly, the ones I use are way overkill for the projects I do. But, for around $2.00, it saves me a whole lot of separate parts, and build time. Most portable devices these days, basically are just web interfaces. Access the internet. Most of the processor intensive work is done by the remote computers. You send input over the internet, you receive the results and display it on the screen. It's really cheap and simple, no need to over complicate, over power these devices.
Just faster, more reliable communication links. Those who create content are still a niche, not the general market, and will pay a lot more for better tools. I do 3d graphics, and it's pretty slow going on my computers, since I'm cheap, and don't do a lot of it. Got back into it some, when I bought a 3d printer. Not so much the rendering anymore, but I do need to do a shaded preview. Much quicker than full color and textured, but far from real-time. 3d printing is disappointing, takes hours, and parts are weak, barely, if ever useful. Takes a lot of tweaking to get right. But, it still works well enough to print models, which I can make a silicone mold from, and cast in metal. I don't doubt that people can print useful parts, but it takes many hours, and failed parts, to get everything tuned in. Each part is different. Each spool of filament is different enough to need adjustments. Even when you bought the same filament before, same brand, same type, same vendor... I don't have that much free time, or patience to invest. |

| 20-12-2021 00:15 | |

| James___★★★★★ (5513) |

duncan61 wrote: Where you live in Perth is an idyllic spot. It's not representative of the rest of the world. |

| 20-12-2021 09:58 | |

| Into the Night (21592) |

HarveyH55 wrote:IBdaMann wrote:HarveyH55 wrote:Doubtful. Most computers aren't even recognized as computers. Such things as your cell phone, a digital thermostat, the computer in your car that runs the engine, the traffic light, the webcam, the TV set, the Blu-ray player, digital timers, digital controls on washing machines, dryers, and ovens. Microwave timers, ticketing machines, pinball games, GPS devices, Christmas light sequencers, digital voltmeters, keyboards, regulatory systems in almost any mill such as a paper mill, wastewater plant, gravel pit, lumber mill, etc. Many of these have no fans or even heat sinks. Many are ARM based. Some are PIC based. These base coded processors don't generate heat like the micro-coded processors do, such as the main CPU found in your typical PC. My PC is water cooled. Much quieter. I don't need to dial in my 3d printer for each spool I put in it. I suspect you are having trouble with your slicing software. Most 3d printing is not meant to be production fabrication. It is meant for prototyping. Once you have it right, people typically turn to injection molding to mass produce the object (if it's in plastic) or to CNC machining if something else, or to cast molding. |

| 21-12-2021 08:15 | |

| ellisael☆☆☆☆☆ (10) |

Wow, this has been an extremely enlightening discussion - looking forward to more as a new entrant |

| 21-12-2021 16:05 | |

| James___★★★★★ (5513) |

Into the Night wrote:IBdaMann wrote:James___ wrote:p.s., I bought my p.c. because it's SSD with a graphics card with a couple MB Aftermarket graphics cards will always be an improvement. Built-in graphics will mostly be for streaming YouTube and stuff like that. When people see demonstrations of drawing on a tablet, that is a 2-dimensional model. That requires much less memory and allows for a slower process than anything done in 3D. |

| 21-12-2021 16:20 | |

| James___★★★★★ (5513) |

As with holes in the ozone layer, over Antarctica they happen during the southern hemisphere's summer. When a hole over the Arctic happens, it is during the southern hemisphere's summer. The difference is that Antarctica is exposed to direct solar radiation because the Van Allen radiation belts aren't shielding it and with the Arctic, it's much less exposed. Please be mindful that we're talking about a layer of the atmosphere that starts at about 12 km/7.5 miles up. |

| 21-12-2021 16:41 | |

| IBdaMann (14395) |

James___ wrote:As with holes in the ozone layer, over Antarctica they happen during the southern hemisphere's summer. Nope. Over its winter, not summer. James___ wrote:When a hole over the Arctic happens, it is during the southern hemisphere's summer. Yes. James___ wrote:The difference is that Antarctica is exposed to direct solar radiation because the Van Allen radiation belts aren't shielding it and with the Arctic, it's much less exposed. Is that because the polarization of the magnetosphere accelerates the momentum of the sun's radiation along its radial eigenvector? This is really easy stuff. It isn't like it's rocket science brain surgery astrophysics! How do you not know this? Someday my project will work out and will draw attention to my not having a life in Norway because of real Americans. . |

| 21-12-2021 18:20 | |

| James___★★★★★ (5513) |

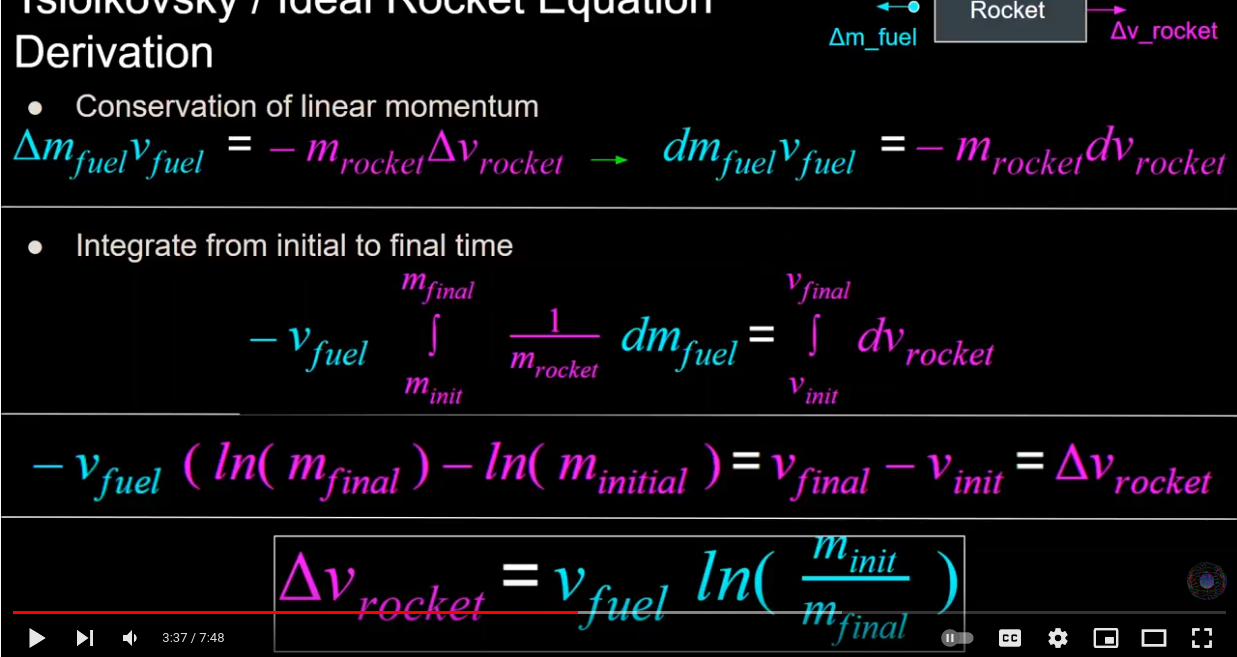

IBdaMann wrote:James___ wrote:As with holes in the ozone layer, over Antarctica they happen during the southern hemisphere's summer. Nope. Rocket science is more along the lines of Attached image:

Edited on 21-12-2021 18:26 |

| 21-12-2021 18:32 | |

| James___★★★★★ (5513) |

With holes in the ozone layer, it is literally PhD level research. A person can say eigen vector but that could also explain how light going into a prism has different colors. This is because refraction in the prism itself allows for different vectors. Then based on the length of the vector there are different colors/wavelengths of light. ie., a prism is one form of an eigen vector. p.s., some people do not like hybrids. This is because people dislike change. It is like saying матьполе = motherboard which it does ≠. That also represents change of a different nature. Edited on 21-12-2021 18:58 |

| 21-12-2021 19:21 | |

| IBdaMann (14395) |

James___ wrote:With holes in the ozone layer, it is literally PhD level research. Ozone holes are material for eighth-graders. OK, ok ... seventh graders. James___ wrote:A person can say eigen vector but that could also explain how light going into a prism has different colors. The famous sculptor was asked how he created such marvellous sculptures and he responded that the sculptures are already there, that he simply chisels away the extraneous stone around it. The prism was asked how he created such brilliant colors and he responded that the colors were already there within the white light, that he simply refracts the separate frequencies. James___ wrote:This is because refraction in the prism itself allows for different vectors. So does peanut butter. So do Doritos cheesy chips. In fact, everything allows for different vectors. I don't know why you insist on using that phrase in virtually every post because it renders whatever you are trying to say as completely meaningless. James___ wrote:p.s., some people do not like hybrids. This is because people dislike change. Nope. It's because people generally dislike shitty cars. . |

| 21-12-2021 21:10 | |

| James___★★★★★ (5513) |

ellisael wrote: Welcome to the forum. Some of the people in here can be a bit challenging so you shouldn't worry too much about what they say. |

| 21-12-2021 23:34 | |

| Into the Night (21592) |

James___ wrote:Into the Night wrote:IBdaMann wrote:James___ wrote:p.s., I bought my p.c. because it's SSD with a graphics card with a couple MB On board GPUs have no problem rendering 3D. |

| 21-12-2021 23:37 | |

| Into the Night (21592) |

James___ wrote: Nope. During the winter. James___ wrote: The Van Allen belts are not in the atmosphere. |

| 21-12-2021 23:38 | |

| Into the Night (21592) |

James___ wrote: Random gibberish. No apparent coherency. |
